Slack alerts for Salesforce

— salesforce, monitoring — 6 min read

This is part of a series of blog posts about advanced monitoring techniques for your Salesforce.com application, so that your business can rely on the tech team.

In this post, we are going to integrate Slack with Salesforce.com for simple monitoring.

Intro

Slack is steadily becoming the de facto standard for team communication. Some teams rely on their team Slack channel for status updates on builds from their Jenkins jobs, GitHub PRs and JIRA integrations.

As a part of monitoring the Salesforce org, we are going to integrate Salesforce.com with Slack. We are going to use this integration to push any alerts we think are important to the team.

A sample problem we are going to address is this: let us say our org uses API calls to an external system to get data. We are going to monitor the number of API calls used and, if it gets close to the limit, send a notification to the team Slack channel.

The Salesforce to Slack integration can solve many other problems, but for this example, let us focus on the API call problem.

Here's what we are going to achieve:

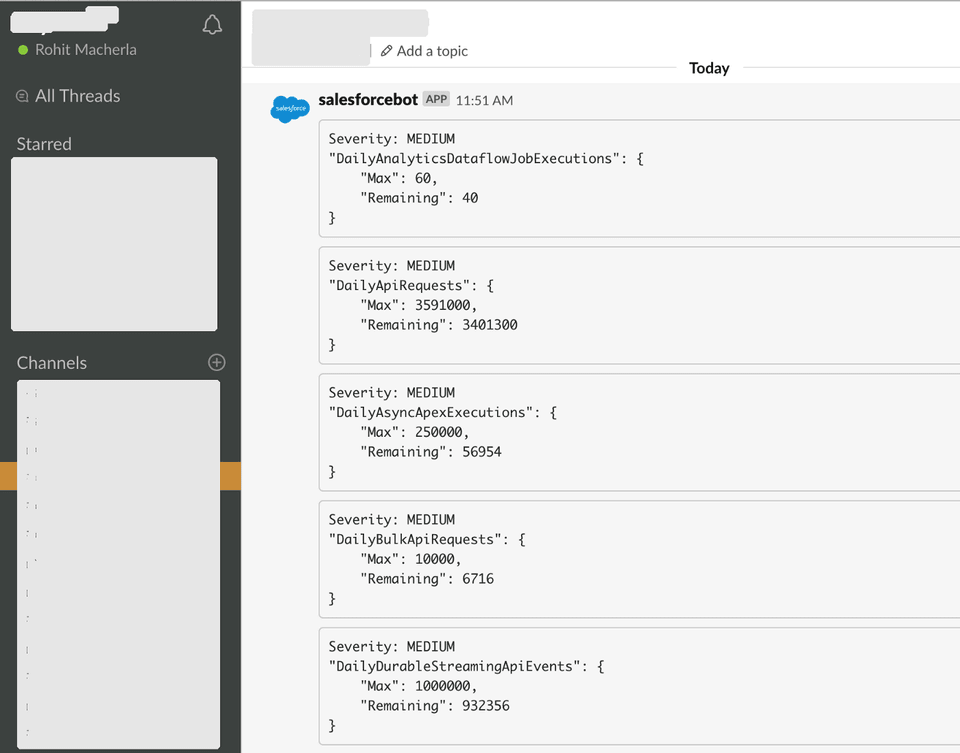

A Slack channel that shows Salesforce.com alerts: Governor Limits alerts in the picture


To be useful, such an alerting mechanism must run frequently, and once per minute seems a good place to start. Scheduling it every 5 minutes would also work well; ultimately, your monitoring use case drives the frequency. For most systems, I presume once a minute is a good interval.

I picked Limits as a good starting point for this project especially because I don't quite like emails as a standard alerting mechanism for API limits. Instead of dealing with just the API limits, I extended the scope to cover all the items supported by the Limits API.

All code for this is in GitHub.

Let's do it

Broadly, the solution consists of:

- Fetching the limits

- Evaluating if limits are over a threshold; and

- Sending an alert message to a Slack channel

Fetching the limits

Salesforce provides a Limits resource that encapsulates some of the governor limits. It's available in two forms:

🔶 Apex class

The Limits class has methods to list a lot of limits, but it isn't as extensive as the Limits resource of the REST API. Perhaps Salesforce has plans to keep the two in sync, but not yet at the time of writing this. This article has since been edited in favour of the OrgLimits class, which encapsulates all the limits we are after.

🔶 REST API

The Limits resource of the REST API is a reliable way to fetch the limits, but each call to fetch them counts against the daily API request limit.

I initially decided to use the REST API as it offers a lot more limits to alert on than the Apex Limits class. Even if we schedule the alerting mechanism to run every minute, that is just 1,440 API calls in 24 hours which, in my opinion, is a very small price to pay for the benefits.

This article has since been edited in favour of the OrgLimits class. While the REST API can be used to fetch the limits, it relies on making an API call, albeit to the same org.

The AlertManagerSchedulable class is the entry point for starting the process. When scheduled, this class checks whether any alerts need to be raised (each alert implements a shouldAlert method) and invokes those that do. The shouldAlert method in turn uses LimitsServiceImpl to fetch the limits using the OrgLimits class.
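To make that flow concrete, here is a minimal sketch in Python rather than Apex. It is not the code from the repo: the names LimitsAlert, run_scheduled, fetch_limits and notify are hypothetical, and the "nothing remaining" rule is only a placeholder for the real threshold check.

```python
from typing import Callable, Dict, List

Limits = Dict[str, Dict[str, int]]


class LimitsAlert:
    """Hypothetical alert; mirrors the shouldAlert idea, not the repo's Apex code."""

    def __init__(self, fetch_limits: Callable[[], Limits], notify: Callable[[str], None]):
        self.fetch_limits = fetch_limits  # plays the role of LimitsServiceImpl
        self.notify = notify              # e.g. a function that posts to Slack
        self.exhausted: List[str] = []

    def should_alert(self) -> bool:
        # Placeholder rule for this sketch: flag limits with nothing remaining.
        limits = self.fetch_limits()
        self.exhausted = [
            name for name, item in limits.items() if item["Remaining"] <= 0
        ]
        return bool(self.exhausted)

    def send(self) -> None:
        self.notify("Exhausted limits: " + ", ".join(self.exhausted))


def run_scheduled(alerts: List[LimitsAlert]) -> int:
    """Entry point a scheduler would call; returns how many alerts fired."""
    fired = 0
    for alert in alerts:
        if alert.should_alert():
            alert.send()
            fired += 1
    return fired
```

Keeping the "should I alert?" decision separate from the sending step is what makes it easy to plug in other kinds of alerts later.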

Evaluating if limits are over a threshold

The OrgLimits response, if converted to a JSON, looks like this:

    {
      ... ...
      "DailyApiRequests": { "Max": 15000, "Remaining": 14998 },
      "DailyAsyncApexExecutions": { "Max": 250000, "Remaining": 250000 },
      "DailyBulkApiRequests": { "Max": 5000, "Remaining": 5000 },
      ... ...
    }

The code goes over all the items returned in the limits response and compares Max to Remaining. All we need is to identify whether the used share, (Max - Remaining) / Max, is greater than a threshold.
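The comparison can be sketched in a few lines of Python. This is an illustration only, assuming the limits have already been parsed into a dictionary shaped like the JSON above; used_fraction and limits_over are hypothetical helper names, and treating a limit as breached when usage is greater than or equal to the threshold is my assumption.

```python
from typing import Dict

Limits = Dict[str, Dict[str, int]]


def used_fraction(item: Dict[str, int]) -> float:
    """Share of a limit already consumed, between 0.0 and 1.0."""
    max_value = item["Max"]
    if max_value <= 0:
        # Guard against division by zero for limits that report a Max of 0.
        return 0.0
    return (max_value - item["Remaining"]) / max_value


def limits_over(limits: Limits, threshold: float) -> Dict[str, float]:
    """Return the limits whose usage is at or above the threshold."""
    over = {}
    for name, item in limits.items():
        used = used_fraction(item)
        if used >= threshold:
            over[name] = used
    return over


sample = {
    "DailyApiRequests": {"Max": 15000, "Remaining": 14998},
    "DailyBulkApiRequests": {"Max": 5000, "Remaining": 5000},
}
breaches = limits_over(sample, 0.70)  # nothing is near its limit in this sample
```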

Configurable thresholds

The limit thresholds can be set in the Custom Metadata Type Config__mdt. A default of 70% for WARNING and 90% for SEVERE messages is already configured in a metadata record named All. If a particular limit needs different thresholds, a new metadata record can be added with that limit's name.

For example, if storage space is already at 85% and you'd like to be alerted only at 95% and 99% for warning and severe messages, respectively, then you'd need to add a new Custom Metadata record for Config__mdt. The key would be DataStorageMB (same as what the API returns), and values would be 0.95 and 0.99 for Warning__c and Severe__c respectively. This is also present as an example record in the customMetadata directory.
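The lookup rule (use the record named after the limit if there is one, otherwise fall back to All) can be sketched as follows. The dictionary below is only a stand-in for the Config__mdt records, the keys warning and severe are hypothetical names for Warning__c and Severe__c, and the use of greater-than-or-equal comparisons is an assumption.

```python
from typing import Dict, Optional

# Stand-in for Config__mdt records: the default "All" plus one override.
CONFIG: Dict[str, Dict[str, float]] = {
    "All": {"warning": 0.70, "severe": 0.90},
    "DataStorageMB": {"warning": 0.95, "severe": 0.99},
}


def thresholds_for(limit_name: str, config: Dict[str, Dict[str, float]] = CONFIG) -> Dict[str, float]:
    """Use the limit's own record if present, else the default record 'All'."""
    return config.get(limit_name, config["All"])


def severity(used: float, limit_name: str, config: Dict[str, Dict[str, float]] = CONFIG) -> Optional[str]:
    """Map a used fraction to 'SEVERE', 'WARNING', or None (no alert)."""
    thresholds = thresholds_for(limit_name, config)
    if used >= thresholds["severe"]:
        return "SEVERE"
    if used >= thresholds["warning"]:
        return "WARNING"
    return None
```

With these values, storage at 85% raises no alert, while any other limit at 85% is reported as a WARNING.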

Now that we know if there are any limits that need to be alerted, we build a message to be sent to the Slack channel.
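As a rough illustration of that step, the breached limits can be turned into one line of text each. The wording of the message and the function name format_alert are my own choices for this sketch, not what the repo produces.

```python
from typing import List, Tuple

# Each breach is (limit name, used fraction, severity label).
Breach = Tuple[str, float, str]


def format_alert(breaches: List[Breach]) -> str:
    """Build a plain-text message with one line per breached limit."""
    lines = [
        f"{level}: {name} is at {used:.0%} of its limit"
        for name, used, level in breaches
    ]
    return "\n".join(lines)


message = format_alert([
    ("DataStorageMB", 0.96, "WARNING"),
    ("DailyApiRequests", 0.90, "SEVERE"),
])
```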

Sending an alert message to a Slack channel

It turns out that Slack has a very simple integration mechanism: an HTTP POST does the job! The request body looks like:

    {
      "channel": "the slack channel where the message needs to be sent",
      "username": "the username to be displayed",
      "text": "the text to be displayed. This will be the one that shows up on the Slack channel",
      "icon_emoji": "the name of an emoji icon in your slack org"
    }

We need a webhook for it, though. Here's how to get one:

- Log in to the Slack account via the browser

- Navigate to Menu > Configure Apps > Incoming WebHooks > New Configuration

- Enter a channel name or create a new channel, and click Add Incoming WebHooks integration

That's it. We are presented with a webhook URL, which needs to be copied into the Custom Metadata's Alert URL. There's also some friendly info and a curl command that we can use to test it out. Here's a sample from Slack's documentation (after we've changed the channel name and webhook URL, of course):

    curl -X POST \
      --data-urlencode "payload={\"channel\": \"#channel-name-here\", \"username\": \"webhookbot\", \"text\": \"This is posted to #channel-name-here and comes from a bot named webhookbot.\", \"icon_emoji\": \":ghost:\"}" \
      https://hooks.slack.com/services/CHANGME

Wrapping it up

When we deploy the code from the GitHub repo to our Salesforce instance, we are ready to roll. We need a Slack channel name, an icon emoji (which can be left as :ghost:) and the webhook URL, and we then configure the Custom Metadata Type Config > Slack with those values. When we schedule the alerting job, it fetches the limits, compares them against the percentage thresholds we've set, and sends a request to the Slack webhook URL to create alerts!
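For readers who want to try the last step outside Salesforce, the webhook call can be sketched with Python's standard library. This is not the Apex code from the repo: build_payload, build_request and post_alert are hypothetical names, and the sketch assumes the webhook accepts a JSON body, which Slack incoming webhooks do.

```python
import json
import urllib.request


def build_payload(channel: str, username: str, text: str, icon_emoji: str = ":ghost:") -> dict:
    """Assemble the request body with the four fields described earlier."""
    return {
        "channel": channel,
        "username": username,
        "text": text,
        "icon_emoji": icon_emoji,
    }


def build_request(webhook_url: str, payload: dict) -> urllib.request.Request:
    """Wrap the payload in an HTTP POST with a JSON body."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def post_alert(webhook_url: str, payload: dict, timeout: float = 10.0) -> int:
    """Send the alert and return the HTTP status code (performs a network call)."""
    with urllib.request.urlopen(build_request(webhook_url, payload), timeout=timeout) as response:
        return response.status
```

Calling post_alert with your own webhook URL and a payload from build_payload should make the message appear in the configured channel.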

P.S. The code can be extended for other alerts and integrations.
